Maximilian Filtenborg

A FastAPI Python project template with CI/CD, Docker, a Makefile, unit & integration testing and linting.

FastAPI is a very popular lightweight framework for building APIs in Python. It has steadily gained popularity over the last few years, which can be attributed both to its ease of implementation and to its integration with Pydantic, a data validation package built on type annotations. This makes manually validating user data a thing of the past. However, coding up your endpoints is still only a small part of creating production-ready APIs. In this blog post we share an open-source template for setting up FastAPI projects, which is the result of building and deploying several FastAPI projects over the years.

You can find the template project at the following link: https://github.com/BiteStreams/fastapi-template.

This template has the following benefits:

Using Docker makes developing and deploying the code much more seamless. It has numerous advantages at the cost of some added complexity. Docker provides a lightweight, reproducible environment for running your code and tests, because the environment itself is scriptable. Virtualization is of course not a new idea (Vagrant, VirtualBox, etc.), but Docker is a much more lightweight technology, which enables new patterns such as ‘test’ containers. Test containers are very powerful: because Docker containers are so lightweight, you can build a fleet of containers purely for running various suites of tests.

In this template, we have set up two test suites: a unit test suite and an integration test suite (larger projects could add more, such as an end-to-end test suite). Both are dockerized, which makes them as reproducible as possible while still being performant. Since the tests are containerized, you can always check out the project and immediately run all the test suites using make test.

This is where Make comes in. Make is great because it is a very intuitive entrypoint into the application: it lets us forget about the specific arguments (setup) required to run the tests, and focus only on what we want to achieve, which is running the tests. The same applies to other commands that every project typically has, such as make up, which starts the application stack.

By keeping these commands consistent, it becomes very easy to switch between projects and to onboard new people onto a project. Onboarding can be a long process when, for example, a specific set of tools is required, in specific versions, run with specific arguments. This setup aims to solve that pain point.

Another advantage of using Docker & Make arises when we look at building CI/CD pipelines. CI/CD pipelines have numerous advantages, like shorter cycle times, fewer bugs in the code, and faster development. In the template we have already included a (very simple) CI pipeline to run the tests in the project, and ‘lint’ the code.

Since the make commands work out of the box, we can use them directly in our CI pipeline! This is great, as we often see CI/CD pipelines that require very specific shell scripting to get everything to work. Such scripting does not reproduce how a developer works, since he or she cannot run the GitHub Actions locally (at the time of writing there are some options available, but they are not fit for this use case). As an added benefit, this avoids vendor lock-in, because we do not rely on tricks specific to one CI/CD vendor.

The CI pipeline is set up with just one command, make (plus a dependency on Docker).

Make sure you have the requirements installed; at a minimum, the template relies on Docker and make.

To get started, clone the project (replace my-project with a name of your choosing):

$ git clone https://github.com/BiteStreams/fastapi-template my-project

Optional: to install a local copy of the Python environment (for example, to get code completion in your editor), run poetry install, given that you have the correct Python version and Poetry installed.

Now enter the project folder from the command line; all commands need to be run from the root of the project. The Makefile is the ‘entrypoint’ for the tools in the application, so you can easily run different commands without remembering the exact arguments. Run:

$ make help

This prints an overview of the available commands:

up # Run the application

done: lint test # Prepare for a commit

test: utest itest # Run unit and integration tests

check # Check the code base

lint # Check the code base, and fix it

clean_test # Clean up test containers

migrations # Generate a migration using alembic

migrate # Run migrations upgrade using alembic

downgrade # Run migrations downgrade using alembic

help # Display this help message

Each entry is a target that you call with the make tool, for example make up or make done.
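The help output above follows a pattern often called a self-documenting Makefile: each target carries a short description that the help command collects. The template's actual help recipe isn't shown here, so the sketch below is a hypothetical Python version of the idea, assuming targets are annotated with a trailing `## description` comment. The Makefile excerpt and its recipes are made up for illustration.

```python
import re

# Hypothetical Makefile excerpt: each documented target ends with "## description".
MAKEFILE = """\
up: ## Run the application
\t@echo starting the application stack

test: utest itest ## Run unit and integration tests

utest: ## Run unit tests
\t@echo running unit tests
"""

# Target name, optional prerequisites, then the "## description" comment.
# Excluding "=" from the prerequisites skips variable assignments such as "X:=y".
HELP_LINE = re.compile(r"^([A-Za-z0-9_-]+):([^#=]*)##\s*(.+)$")


def help_entries(makefile: str) -> list[tuple[str, str]]:
    """Return (label, description) pairs for every annotated target."""
    entries = []
    for line in makefile.splitlines():
        match = HELP_LINE.match(line)
        if match:
            name, prereqs, description = match.groups()
            prereqs = prereqs.strip()
            # Keep prerequisites in the label, like "test: utest itest" above.
            label = f"{name}: {prereqs}" if prereqs else name
            entries.append((label, description.strip()))
    return entries


def render_help(makefile: str) -> str:
    """Align the labels into one column, similar to the make help output."""
    entries = help_entries(makefile)
    width = max((len(label) for label, _ in entries), default=0)
    return "\n".join(f"{label:<{width}}  # {desc}" for label, desc in entries)


print(render_help(MAKEFILE))
```

Recipe lines start with a tab, so the pattern never mistakes them for targets; only lines that opt in with `##` end up in the help text.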

Now start the API using the up command:

$ make up

Then, in another shell, update the database schema using alembic:

$ make migrate

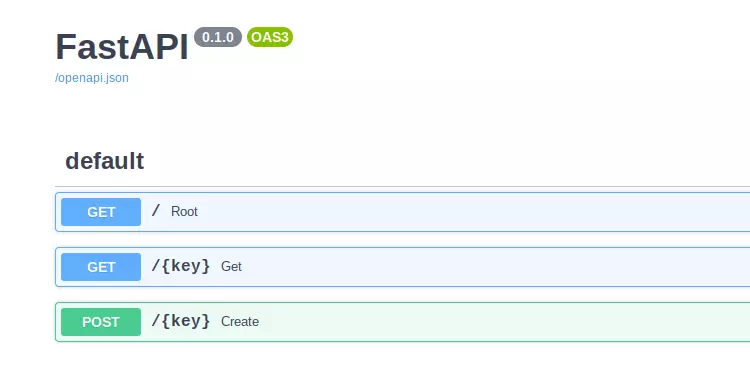

That’s it, now the app is running and is reachable on localhost:5000/docs.

If you go to this url, you should see the API documentation:

Code changes are automatically detected using a Docker volume.

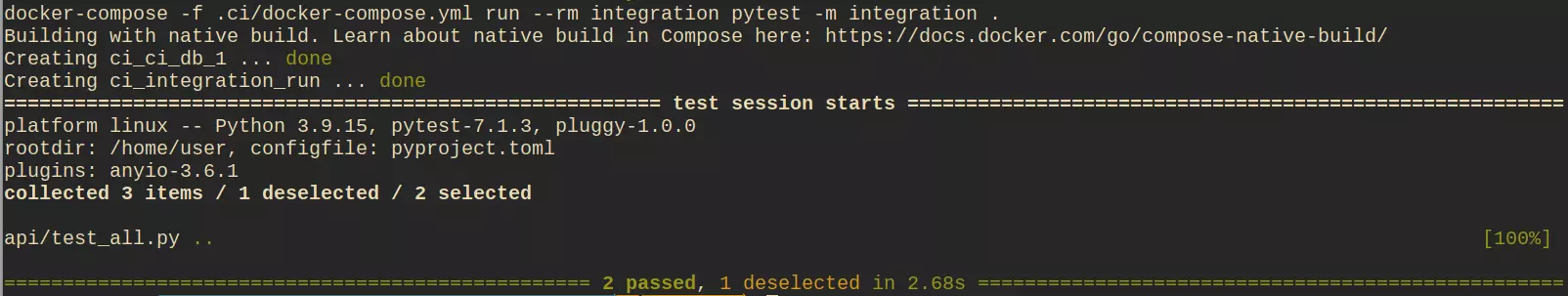

You can run tests using the make utest and make itest commands.

The tests are containerized, and their Docker setup can be found in the .ci/ folder. They are written using pytest. You can run all the tests with:

$ make test

This runs the integration & unit tests. If you want to run the unit and integration tests separately, use make itest to run the integration tests and make utest to run the unit tests.
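Part of why the unit suite is fast is that it does not need a database. One common way to achieve this, sketched below under our own assumptions (the `Item`, `ItemRepository`, `InMemoryItemRepository` and `rename_item` names are hypothetical, not taken from the template), is to hide data access behind a small interface and swap in an in-memory fake for unit tests, leaving the real PostgreSQL-backed implementation to the integration suite.

```python
from dataclasses import dataclass
from typing import Optional, Protocol


@dataclass
class Item:
    id: int
    name: str


class ItemRepository(Protocol):
    """Data-access interface; a production implementation could use SQLAlchemy."""

    def get(self, item_id: int) -> Optional[Item]: ...

    def add(self, item: Item) -> None: ...


class InMemoryItemRepository:
    """Test double that keeps items in a dict instead of PostgreSQL."""

    def __init__(self) -> None:
        self._items: dict[int, Item] = {}

    def get(self, item_id: int) -> Optional[Item]:
        return self._items.get(item_id)

    def add(self, item: Item) -> None:
        self._items[item.id] = item


def rename_item(repo: ItemRepository, item_id: int, new_name: str) -> Item:
    """Logic under test: it only talks to the repository interface."""
    item = repo.get(item_id)
    if item is None:
        raise KeyError(item_id)
    item.name = new_name
    repo.add(item)
    return item


def test_rename_item() -> None:
    # pytest collects this function by its test_ prefix; no database container is needed.
    repo = InMemoryItemRepository()
    repo.add(Item(id=1, name="old"))
    assert rename_item(repo, 1, "new").name == "new"
    assert repo.get(1) == Item(id=1, name="new")
```

An integration test could run the same assertions against a database-backed repository, which is exactly the part that needs the PostgreSQL container.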

You will notice that make itest is significantly slower, as it also starts up a PostgreSQL database container for the tests.
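Because the integration tests depend on a freshly started database container, the test setup typically has to wait until the database accepts connections. Below is a minimal standard-library sketch of such a readiness check; `wait_for_port` is a hypothetical helper, not part of the template, and the host and port (5432 is PostgreSQL's default) depend on how your containers are configured.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 30.0, interval: float = 0.5) -> bool:
    """Poll until a TCP connection to host:port succeeds; False once the timeout expires."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # A successful connect means something is listening on the port.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Refused or timed out: retry until the deadline passes.
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

For example, `wait_for_port("localhost", 5432)` could run once before the integration suite starts. A successful TCP connection is a coarse signal: PostgreSQL may accept connections shortly before it is ready to serve queries, so a query-level check (or the `pg_isready` utility) is stricter.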

Since most projects require a relational database, we decided to include one (PostgreSQL) out of the box. Because the database runs inside a Docker container, it is launched automatically when you start the application. The template also includes commands to create and run migrations using alembic.

The code interacts with PostgreSQL using SQLAlchemy, which is an industry standard.

We also have a blog post, 10 Tips for adding SQLAlchemy to FastAPI, that describes some of these implementation choices.

To migrate your database to the latest schema, run:

$ make migrate

To create a new migration after you have made schema changes, run make migrations, adding a message that clarifies what was changed.
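To illustrate what the migration commands do: alembic keeps a chain of revisions, each with an upgrade and a downgrade step, and records the currently applied revision in the database (in a table named `alembic_version` by default). The toy sketch below mimics that idea with the standard library's `sqlite3` instead of PostgreSQL and hand-written SQL instead of alembic's migration scripts; the revisions and the `schema_version` table are hypothetical.

```python
import sqlite3
from typing import Optional

# Hypothetical revisions, oldest first: (revision id, upgrade SQL, downgrade SQL).
REVISIONS = [
    ("0001", "CREATE TABLE item (id INTEGER PRIMARY KEY, name TEXT)", "DROP TABLE item"),
    ("0002", "CREATE INDEX ix_item_name ON item (name)", "DROP INDEX ix_item_name"),
]
REVISION_IDS = [rev for rev, _, _ in REVISIONS]


def current_revision(conn: sqlite3.Connection) -> Optional[str]:
    """Read the applied revision from the bookkeeping table (None means an empty schema)."""
    conn.execute("CREATE TABLE IF NOT EXISTS schema_version (version_num TEXT)")
    row = conn.execute("SELECT version_num FROM schema_version").fetchone()
    return row[0] if row else None


def _set_revision(conn: sqlite3.Connection, rev: Optional[str]) -> None:
    conn.execute("DELETE FROM schema_version")
    if rev is not None:
        conn.execute("INSERT INTO schema_version (version_num) VALUES (?)", (rev,))


def upgrade(conn: sqlite3.Connection) -> None:
    """Apply every revision after the current one, up to the latest."""
    current = current_revision(conn)
    start = REVISION_IDS.index(current) + 1 if current else 0
    for rev, up_sql, _ in REVISIONS[start:]:
        conn.execute(up_sql)
        _set_revision(conn, rev)
    conn.commit()


def downgrade(conn: sqlite3.Connection) -> None:
    """Undo only the most recently applied revision."""
    current = current_revision(conn)
    if current is None:
        return
    i = REVISION_IDS.index(current)
    conn.execute(REVISIONS[i][2])
    _set_revision(conn, REVISION_IDS[i - 1] if i > 0 else None)
    conn.commit()
```

Storing the applied revision in the database itself is what lets an upgrade skip revisions that have already run.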

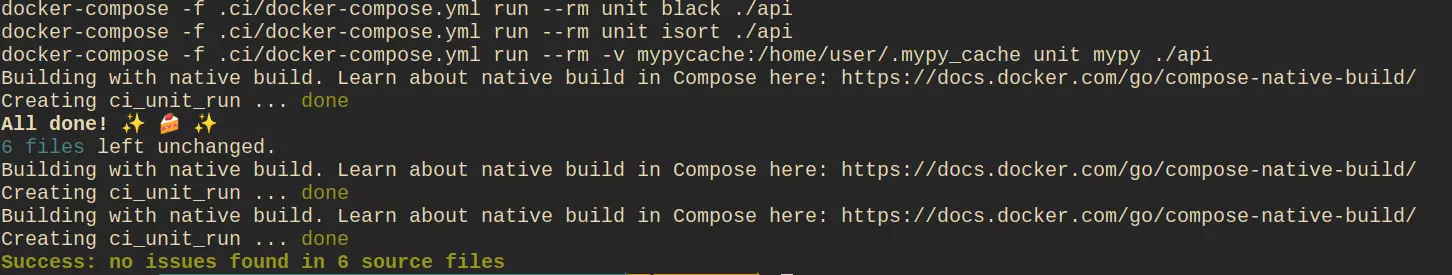

To make sure that everyone writes code that is nicely and consistently formatted, it is a good choice to use linting tools to make your life easier. This allows a team (when coding and doing code reviews) to focus on what the code is doing, instead of whether there is an appropriately made line break at the end of the file or not.

To lint (check) the code for ‘errors’, run:

$ make check

This checks the code with several tools, including black and isort.

If any of these tools fails, the command exits with exit code 1.
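The exit-code behaviour described above can be sketched in a few lines of Python. This is a hypothetical stand-in for the Makefile target, not the template's implementation: it runs every command, reports the ones that failed, and returns 1 if any of them did.

```python
import subprocess
import sys


def run_checks(commands: list[list[str]]) -> int:
    """Run every check command; return 1 if any of them failed, else 0."""
    failed = []
    for cmd in commands:
        try:
            returncode = subprocess.run(cmd).returncode
        except FileNotFoundError:
            returncode = 127  # tool not installed; 127 mirrors the shell's "command not found"
        if returncode != 0:
            failed.append(" ".join(cmd))
    for cmd in failed:
        print(f"check failed: {cmd}", file=sys.stderr)
    return 1 if failed else 0
```

To use it for real you would pass the actual tool invocations, for example `["black", "--check", "."]` and `["isort", "--check-only", "."]`, and hand the result to `sys.exit`, so that CI treats the non-zero status as a failed build.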

To automatically fix the errors reported by black and isort, run:

$ make lint


It can be nice to add a git pre-commit hook with make lint in it, or add it as a hook in your IDE of choice.

Black is configured with a ‘line_length’ of 120, which is our preference. You can configure all of these tools in the pyproject.toml file in the root directory.

Any serious project nowadays has CI (and CD) pipelines, and this one does too!

The pipeline is implemented with GitHub Actions and the setup is very simple, as it re-uses the tools that we were using earlier.

By default, the CI pipeline runs whenever you push to the main branch. You can easily change this in the code-integration.yml file inside the .github/workflows folder.

The CI pipeline runs make test and make check, just as you would do locally. This ensures that the code is properly tested and linted.

We hope this template can help people in their FastAPI projects! This structure has helped us get up and running in several of our projects, and has enabled us to quickly onboard people to new projects. The great thing about this structure is that the Makefile setup is not specific to FastAPI, or even to Python. We have, for example, used the same Makefile + Docker structure for some of our Golang projects.

Originally, this template was made for a presentation on unit testing that we gave at the SharkTech talks at Start Up Village (SUV) in Amsterdam.

Maximilian is a machine learning enthusiast, experienced software engineer, and co-founder of BiteStreams. In his free time he listens to electronic music and is into photography.
