Donny Peeters

Donny Peeters

Introducing a relational database such as PostgreSQL to FastAPI the proper way as a data engineer.

The popularity of FastAPI has been steadily growing over the last few years. It is climbing the top 25 most popular web frameworks and technologies in the Stack Overflow Developer Survey 2022, right behind Flask and Django (but beating both in Loved vs. Dreaded). In an age where data and AI are claiming a bigger part of any business, it is no surprise there is a lot of interest in the lightweight, performant API framework for Python. And you only need a few lines of code to get started:

|

|

FastAPI’s usage among data scientists and engineers is growing as we speak. The framework being so minimalistic might tempt you to believe there is not a lot of room for error. But complexity is incremental. Eventually these API’s will have to be updated, extended and maintained. In that case, you are better off having read The Art of Computer Programming than The Art of Statistics. Just thinking about the poor excuse for an ETL pipeline I hacked together years ago using Pandas makes me shiver to this day.

Whether you use FastAPI in a large web app or a small ML inference service, chances are want to track metrics, save information or add some users at some point. Therefore, I want to share some learnings from having added a relational database to FastAPI using SQLAlchemy multiple times in the past. I bundled these learnings into 10 practical tips for integrating SQLAlchemy with your FastAPI application. The code samples can be seen in action in our FastAPI Template Project.

SQLAlchemy provides a core framework for communicating with a database, as well as an ORM. Everyone Python developer that has worked with a relational database is probably familiar with SQLAlchemy. When you decided to integrate with FastAPI however, it is important to keep clear separations of concern. In this section, we introduce a few abstractions we have found to be helpful for both maintaining and testing the code.

SQLAlchemy ORM uses what is called the data mapper pattern. The data mapper pattern is great for its flexibility, and attempts to bridge the Object–relational impedance mismatch. But there are disadvantages to using these ORM models everywhere in your application, in particular your API. What if you want to keep the endpoints simple to users, but have a highly normalized data model or want to hide fields to users? You might end up adding awkward logic to create contextual awareness of the fields. Or worse: your API will be hard to use. Hence, you should consider evolving your API and database schema separately.

|

|

In particular, you should not try to create a class that is both a Pydantic BaseModel and a declarative base from SQLAlchemy. Pydantic is above all an input validation library, so it should be used to validate user input in the API. Above, we see an example of a model for which both a Pydantic BaseModel is created for the API and a SQLAlchemy model. You only need to translate between these objects when entering your domain layer. The next tip tells you where to do that.

Being able to swap components in your software is a good indicator that it is modular. And although you are probably not switching databases anytime soon, being able to swap persistence with in-memory structures both allows you to postpone introducing new entities (or even a whole database), as well as make it easyy to write unit tests. It requires creating an interface around the storage layer and all query logic. Enter the Repository Pattern.

|

|

The Repository Pattern can be found in books such as Domain Driven Design. For our purpose, repositories are intermediaries that store the entities in our domain model. A repository typically has methods such as save(), get_by_id() or get_all_enabled(). The signatures of these methods consist of domain models and query parameters to filter or paginate the response. They do not expose how they persist entities, only which entities they persist.

The interface of a repository can be implemented using SQLAlchemy, but also just by using a dictionary of objects to use in unit tests.

One pattern I personally found to be very helpful is the use of Query Objects. Since you often want to query your database using several filters, collecting them in a Query Object allows you to add or remove filter parameters, change defaults filter values and even reuse filters without changing a Repository’s interface. It also keeps the interface clean as it reduces the amount of arguments to the repository methods.

|

|

We have all written functions with too many arguments at some point; a code-smell suggesting you might want to introduce a new object. In the case of repositories, it’s often a Query Object you need.

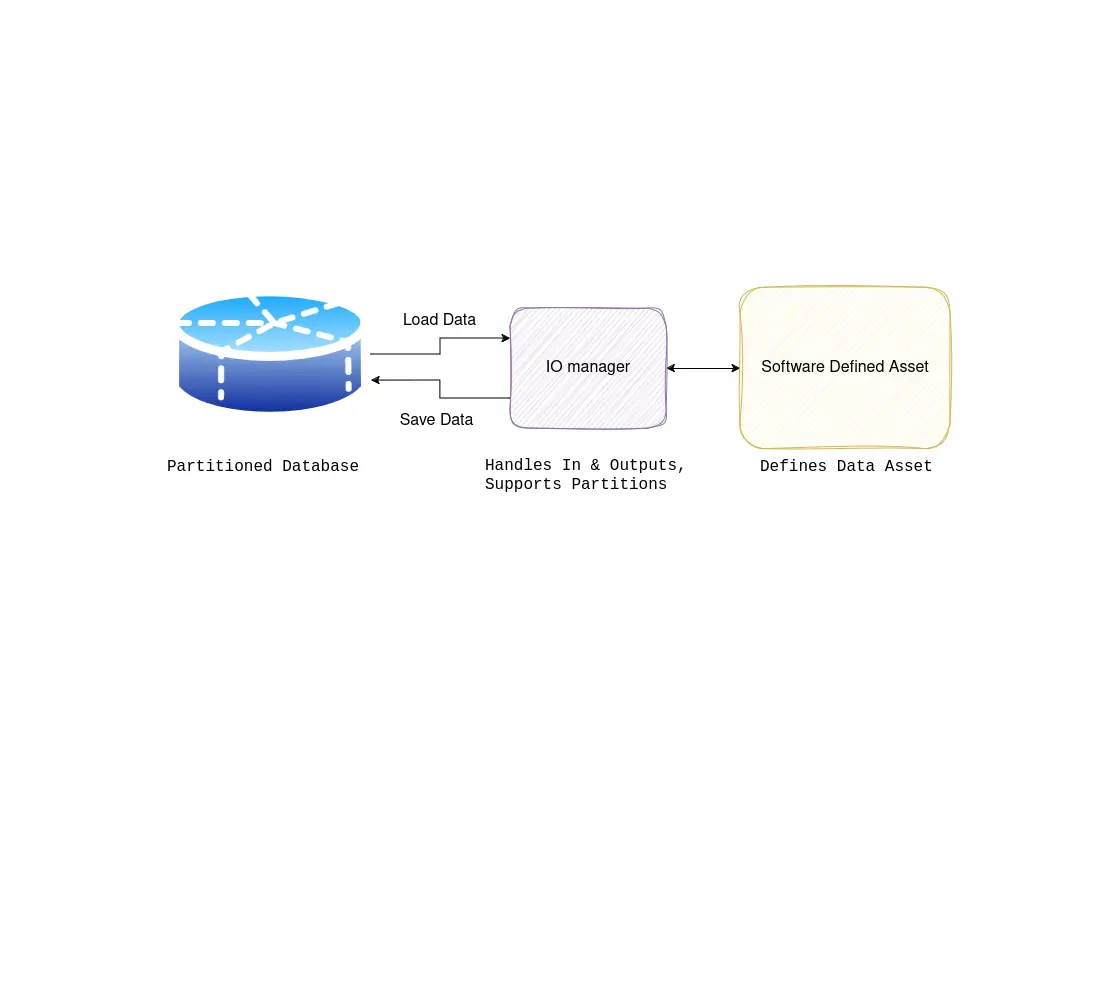

Both FastAPI and SQLAlchemy have their own documentation on how to add a database using depends (FastAPI) and managing connections, sessions and transactions, etc. (SQLAlchemy). But the former works with a rather succinct setup, while the latter is a very detailed explanation of how to work with SQLAlchemy in all its glory. Here, we show a complete yet simple setup that should do the trick for most API’s. This leverages SQLAlchemy’s different scopes without coupling implementation details to your domain.

As every documentation page will tell you, a big anti-pattern is not re-using database connections. They are expensive to set up, so reconnecting for every transaction introduces significant overhead. The FastAPI documentation shows you how you could approach this using global variables. Another way to deal with this is caching the function that initialises the engine (the object managing the connection pool).

|

|

This way you re-use the engine between request and only pay for the connections on the first one. More FastAPI-specific implementations create and close these objects using lifespan events, or using the new lifespan kwarg to the FastAPI app object:

|

|

However, for SQLAlchemy this does not mean that you pay for connections only on startup, as the documentation explains:

All SQLAlchemy pool implementations have in common that none of them “pre create” connections - all implementations wait until first use before creating a connection.

The second layer of bookkeeping is the managing of sessions. Sessions are not expensive to create. They form a layer between the database that performs transactions, identity mapped objects etc. SQLAlchemy has its own documentation on sessions, where they define the transaction scope and the session scope. In short, in the case of API’s it is good practice to create one session per request.

To manage a sessions life cycle, we can leverage FastAPI’s Depends by creating an iterator.

|

|

The FastAPI endpoint to create a Todo would then simply read:

|

|

Every time the endpoint is called, a repository is instantiated with a new session that is automatically closed after the request ends. Even when an exception occurs, we rollback the session.

The last thing to manage is individual transactions. Sometimes, one transaction per request offers enough granularity. In that case, committing the session right before closing in the create_todo_repository function does the trick. When you want to commit transactions in the service layer, repositories need a way to construct transactions without introducing a leaky abstraction (by exposing the session). Luckily, context managers are a great solution for this.

To make a context manager out of a repository, you need to implement an __enter__() and an __exit__() method. We can commit the session when exiting the context. The __exit__() even allows you to handle any exceptions raised in the current context, so you can safely rollback any pending transactions before exiting.

|

|

You are most likely going to change your database schema at one point. Alembic has been the standard schema management solution for SQLAlchemy. (They were also developed by the same author!)

The great thing about repositories is that they are easy to test. You should check if they save and return the right entities, perform the right filtering and they do not accept invalid values. If you test your repositories well, it gives you a lot of comfort writing more involved business logic since you know that you are at least using the right entities. If you have in-memory implementations (and perhaps add ContractTests for your repositories as well), you can even swap the SQL implementations for these lightweight test doubles to unit test business logic.

While we are at it, we might as well test our migrations by using Alembic in our test setup. This ensures you do not forget to generate and commit your migrations, since your tests will fail without them.

|

|

Before diving into SQLAlchemy, here are two tips that help make the right considerations when you have not bitten the bullet yet about introducing SQLAlchemy.

Everything is a trade-off. And although a big part of the web runs on relational databases, PostgreSQL is not the answer to everything. Does your inference endpoint only need a trained model that updates its version once in a while? Then it is probably enough to add the version number to the models filename. (Python wheels is one of the many examples of how data can be managed largely by a naming convention.) Want to cache some calculations to speed up response times? Check out the functools caching decorators before adding Redis. Even when authentication can be reduced to hashed environment variables if it is only for your launching customer.

On the other hand, you might have performance requirements that can not be met by a relational database that are optimized for OLTP. For OLAP use-cases such as analytics dashboards on large amounts of data, there may be more suitable database technologies (check out Google BigQuery, Amazon Redshift, Snowflake, or even DuckDB!). The amount of reads and writes, the query patterns, and ACID requirements should all be considered. The data field develops quickly and has shown us that there is no one size fits all.

If you postponed adding a relational database long enough, you should take another critical look at your data model. Of course, if you manage the data yourself and it is not mission-critical, you get away with a lot. But the moment you deploy to production and start storing other people’s data, migrations can become a real pain. Classics include creating a nullable column that should have been mandatory, or decreasing VARCHAR lengths. And how normalized should your schema be? Do you create that extra table and sacrifice some query performance, or do you accept the duplication?

Architecture is what’s hard to change, and your database schema is part of it. If you want to avoid common data modeling mistakes, check out my blog post about that as well.

SQLAlchemy and FastAPI are both great Python packages that get you up and running with minimal setup.

In this post we listed several aspects to take into account as you add a relational database to your API and your project matures.

But as mentioned above, everything is a trade-off.

You might find turning on Pydantic’s ORM mode a lot easier to work with and never run into needing more flexibility on both your API and database mapping.

Maybe you want to leave out the context manager and just set autocommit=True while creating a new session. In the end, you know the requirements of your application best.

Having said that, hopefully these tips will help you build a great data API, or at least give food for thought regarding the design principles. If you want to see all the code in this post in action, you can check out our FastAPI Template Project on Github and read our blog post. If you think we missed important tips or you disagree on the ones listed, feel free to reach out! We are always happy to discuss and improve on ideas.

Donny is a mathematician who started freelancing as a software/data engineer before co-founding BiteStreams. He can quickly spot design quirks and adapt systems to business needs. In his free-time he likes to work out, read and grab a drink with friends.

About usEnjoyed reading this post? Check out our other articles.

Donny Peeters

Donny Peeters

Maximilian Filtenborg

Maximilian Filtenborg

Maximilian Filtenborg

Maximilian Filtenborg

Get more data-driven with BiteStreams, and leave the competition behind.

Contact us